- CKS is one of the more demanding certifications in the DevOps world.

- In this article, we have consolidated practice questions grouped by exam topic.

- As we don't yet offer sandboxes for taking this mock test, time yourself to check your speed.

- The questions here follow the order of the syllabus. In the actual exam, questions can come in any order, and some individual questions test knowledge of multiple areas.

- If you are not able to perform the task described in a question, go through the answer and try again.

- During the exam, you are allowed to access the Kubernetes documentation, so consult it whenever you are blocked.

- Apart from the official Kubernetes docs, you are also allowed to consult the documentation for Trivy, Falco, etcd, and AppArmor.

CKS Exam Questions on Cluster Setup

Question 1

Create a network policy that denies egress traffic to any domain outside of the cluster. The network policy applies to Pods with the label app=backend and also allows egress traffic for port 53 for UDP and TCP to Pods in any other namespace.

Solution

Create a file named deny-egress-external.yaml that defines the network policy. The network policy needs to set the Pod selector to app=backend and declare the Egress policy type. Make sure to allow port 53 for the protocols UDP and TCP. The namespace selector of the egress rule needs to use {} to select all namespaces:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-egress-external
spec:
  podSelector:
    matchLabels:
      app: backend
  policyTypes:
  - Egress
  egress:
  - to:
    - namespaceSelector: {}
    ports:
    - port: 53
      protocol: UDP
    - port: 53
      protocol: TCP

Run the apply command to instantiate the network policy object from the YAML file:

$ kubectl apply -f deny-egress-external.yaml

Question 2

Create a Pod named allowed that runs the busybox:1.36.0 image on port 80 and assign it the label app=frontend. Make a call to http://google.com using wget, since the busybox image does not include curl. The network call should be allowed, as the network policy doesn’t apply to the Pod.

Solution

A Pod that does not match the label selection of the network policy can make a call to a URL outside of the cluster. In this case, the label assignment is app=frontend:

$ kubectl run allowed --image=busybox:1.36.0 -l app=frontend --port=80 -it --rm --restart=Never -- wget http://google.com --timeout=5 --tries=1

Connecting to google.com (142.250.69.238:80)

Connecting to www.google.com (142.250.72.4:80)

saving to /'index.html'

index.html 100% |**| 13987 0:00:00 ETA

/'index.html' saved

pod "allowed" deleted

Question 3

Create another Pod named denied that runs the busybox:1.36.0 image on port 80 and assign it the label app=backend. Make a call to http://google.com using wget, since the busybox image does not include curl. The network call should be blocked.

Solution

A Pod that does match the label selection of the network policy cannot make a call to a URL outside of the cluster. In this case, the label assignment is app=backend:

$ kubectl run denied --image=busybox:1.36.0 -l app=backend --port=80 -it --rm --restart=Never -- wget http://google.com --timeout=5 --tries=1

wget: download timed out

pod "denied" deleted

pod default/denied terminated (Error)

Question 4

Install the Kubernetes Dashboard or make sure that it is already installed. In the namespace kubernetes-dashboard, create a ServiceAccount named observer-user. Moreover, create the corresponding ClusterRole and ClusterRoleBinding. The ServiceAccount should only be allowed to view Deployments. All other operations should be denied. As an example, create the Deployment named deploy in the default namespace with the following command: kubectl create deployment deploy --image=nginx --replicas=3.

Solution

First, see if the Dashboard is already installed. You can check the namespace the Dashboard usually creates:

$ kubectl get ns kubernetes-dashboard

NAME STATUS AGE

kubernetes-dashboard Active 109s

If the namespace does not exist, you can assume that the Dashboard has not been installed yet. Install it with the following command:

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.6.0/aio/deploy/recommended.yaml

Create the ServiceAccount, ClusterRole, and ClusterRoleBinding. Make sure that the ClusterRole only allows listing Deployment objects. The following YAML manifest has been saved in the file dashboard-observer-user.yaml:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: observer-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  name: cluster-observer
rules:
- apiGroups:
  - 'apps'
  resources:
  - 'deployments'
  verbs:
  - list
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: observer-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-observer
subjects:
- kind: ServiceAccount
  name: observer-user
  namespace: kubernetes-dashboard

Create the objects with the following command:

$ kubectl apply -f dashboard-observer-user.yaml

Question 5

Create a token for the ServiceAccount named observer-user that will never expire. Log into the Dashboard using the token. Ensure that only Deployments can be viewed and not any other type of resource.

Solution

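Run the following command to create a token for the ServiceAccount. Be aware that the kubectl documentation describes --duration 0s as letting the API server determine the token lifetime, so the token is not guaranteed to be non-expiring; a truly long-lived token requires a Secret of type kubernetes.io/service-account-token that references the ServiceAccount. Copy the token that was rendered in the console output of the command: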

$ kubectl create token observer-user -n kubernetes-dashboard --duration 0s

eyJhbGciOiJSUzI1NiIsImtpZCI6Ik5lNFMxZ1...
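To see when an issued token actually expires, one option is to decode its payload and read the exp claim. The following is a minimal sketch, assuming a POSIX shell and a base64 tool that accepts -d; the helper name jwt_payload is hypothetical and not part of kubectl.

```shell
#!/bin/sh
# Hypothetical helper: print the decoded payload (second segment) of a JWT.
jwt_payload() {
  # Take the payload segment and convert base64url to standard base64.
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # Restore the padding that base64url encoding strips.
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do
    payload="${payload}="
  done
  printf '%s' "$payload" | base64 -d
}

# Usage (requires cluster access):
#   jwt_payload "$(kubectl create token observer-user -n kubernetes-dashboard)"
```

The exp value in the printed JSON is a Unix timestamp, which makes it easy to confirm how long the token stays valid.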

Run the proxy command and open the link http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy in a browser:

$ kubectl proxy

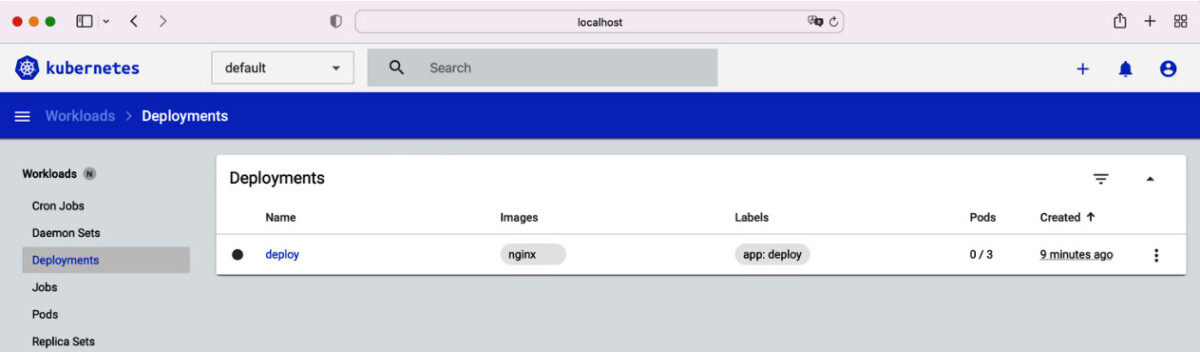

Select the “Token” authentication method and paste the token you copied before. Sign in to the Dashboard. You should see that only Deployment objects can be listed, as shown in the screenshot below.

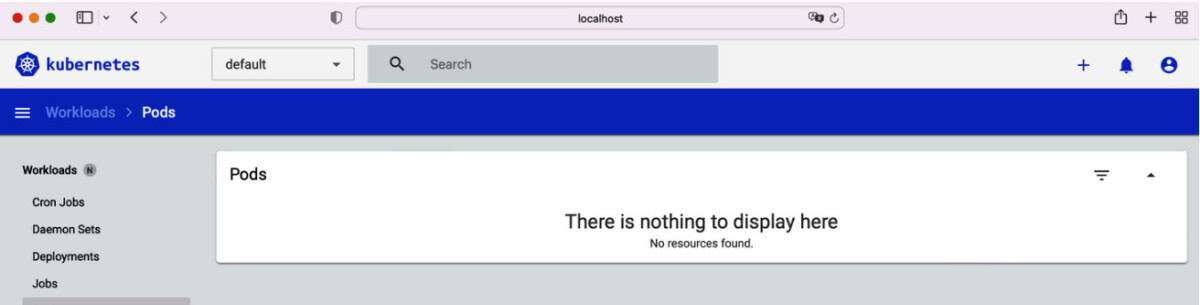

Every other resource type shows “There is nothing to display here.” when you try to list it, for example Pods. The figure below shows the same.

Question 6

Download the binary file of the API server with version 1.26.1 on Linux AMD64. Download the SHA256 checksum file for the API server executable of version 1.23.1. Run the OS-specific verification tool and observe the result.

Solution

Download the API server binary with the following command:

$ curl -LO "https://dl.k8s.io/v1.26.1/bin/linux/amd64/kube-apiserver"

Next, download the SHA256 file for the same binary, but a different version. The following command downloads the file for version 1.23.1:

$ curl -LO "https://dl.k8s.io/v1.23.1/bin/linux/amd64/kube-apiserver.sha256"

Comparing the binary file with the checksum file results in a failure, as the versions do not match:

$ echo "$(cat kube-apiserver.sha256) kube-apiserver" | shasum -a 256 --check

kube-apiserver: FAILED

shasum: WARNING: 1 computed checksum did NOT match
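The same check can be wrapped in a small function so that the result is available as an exit code. This is a sketch under the assumption that GNU coreutils sha256sum is installed (the text above uses shasum -a 256 instead); verify_sha256 is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: verify FILE against a checksum file that holds only the hex digest.
verify_sha256() {
  file=$1
  sumfile=$2
  # sha256sum --check expects lines of the form "<digest>  <filename>" (two spaces).
  printf '%s  %s\n' "$(cat "$sumfile")" "$file" | sha256sum --check --status
}

# Usage:
#   verify_sha256 kube-apiserver kube-apiserver.sha256 && echo "checksum OK"
```

With the mismatched versions from this exercise, the function should return a non-zero exit code.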

CKS Exam Questions on Cluster Hardening

Question 7

Create a client certificate and key for the user named jill in the group observer. With the admin context, create the context for the user jill.

Solution

Create a private key using the openssl executable. Provide an expressive file name, such as jill.key. The -subj option provides the username (CN) and the group (O). The following command uses the username jill and the group named observer:

$ openssl genrsa -out jill.key 2048

$ openssl req -new -key jill.key -out jill.csr -subj "/CN=jill/O=observer"

Retrieve the base64-encoded value of the CSR file content with the following command. You will need it when creating the CertificateSigningRequest object in the next step:

$ cat jill.csr | base64 | tr -d "\n"

LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tL...

The following script creates a CertificateSigningRequest object:

$ cat <<EOF | kubectl apply -f -
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: jill
spec:
  request: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tL...
  signerName: kubernetes.io/kube-apiserver-client
  expirationSeconds: 86400
  usages:
  - client auth
EOF

Use the certificate approve command to approve the signing request and export the issued certificate:

$ kubectl certificate approve jill

$ kubectl get csr jill -o jsonpath='{.status.certificate}' | base64 -d > jill.crt
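Copying the base64 value into the manifest by hand is error-prone. As a sketch, the CertificateSigningRequest manifest can instead be generated from the CSR file; render_csr_manifest is a hypothetical helper name, and the field values simply mirror the manifest used in this solution.

```shell
#!/bin/sh
# Hypothetical helper: print a CertificateSigningRequest manifest for NAME,
# embedding the base64-encoded contents of CSR_FILE on a single line.
render_csr_manifest() {
  name=$1
  csr_file=$2
  request=$(base64 < "$csr_file" | tr -d '\n')
  cat <<EOF
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: ${name}
spec:
  request: ${request}
  signerName: kubernetes.io/kube-apiserver-client
  expirationSeconds: 86400
  usages:
  - client auth
EOF
}

# Usage (requires cluster access):
#   render_csr_manifest jill jill.csr | kubectl apply -f -
```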

Add the user to the kubeconfig file and add the context for the user. The cluster name used here is minikube. It might be different for your Kubernetes environment:

$ kubectl config set-credentials jill --client-key=jill.key --client-certificate=jill.crt --embed-certs=true

$ kubectl config set-context jill --cluster=minikube --user=jill

Question 8

For the group (not the user!), define a Role and RoleBinding in the default namespace that allow the verbs get, list, and watch for the resources Pods, ConfigMaps, and Secrets. Create the objects.

Solution

Create the Role and RoleBinding. The following imperative commands assign the verbs get, list, and watch for Pods, ConfigMaps, and Secrets to the subject named observer of type group. The user jill is part of the group:

$ kubectl create role observer --verb=get --verb=list --verb=watch --resource=pods --resource=configmaps --resource=secrets

$ kubectl create rolebinding observer-binding --role=observer --group=observer
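Before switching contexts, the permissions can be checked from the admin context through impersonation. The following sketch wraps kubectl auth can-i with the --as and --as-group flags; the function name can_group_do is hypothetical, and the default namespace is assumed.

```shell
#!/bin/sh
# Hypothetical helper: ask the API server whether USER in GROUP may perform
# VERB on RESOURCE in the default namespace.
can_group_do() {
  verb=$1
  resource=$2
  user=$3
  group=$4
  kubectl auth can-i "$verb" "$resource" --as "$user" --as-group "$group" -n default
}

# Usage (requires cluster access and impersonation rights):
#   can_group_do list secrets jill observer
#   can_group_do delete pods jill observer
```

With the Role and RoleBinding from this solution in place, the first call should report that the operation is allowed and the second that it is not.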

Question 9

Switch to the user context and execute a kubectl command that allows one of the granted operations, and one kubectl command that should not be permitted. Switch back to the admin context.

Solution

Switch to the user context:

$ kubectl config use-context jill

We’ll pick one permitted operation, listing ConfigMap objects. The user is authorized to make the call:

$ kubectl get configmaps

NAME DATA AGE

kube-root-ca.crt 1 16m

Listing nodes won’t be authorized. The user does not have the appropriate permissions:

$ kubectl get nodes

Error from server (Forbidden): nodes is forbidden: User "jill" cannot list resource "nodes" in API group "" at the cluster scope

Switch back to the admin context:

$ kubectl config use-context minikube

Question 10

Create a Pod named service-list in the namespace t23. The container uses the image alpine/curl:3.14 and makes a curl call to the Kubernetes API that lists Service objects in the default namespace in an infinite loop. Create and attach the service account api-call. Inspect the container logs after the Pod has been started. What response do you expect to see from the curl command?

Solution

Create the namespace t23:

$ kubectl create namespace t23

Create the service account api-call in the namespace:

$ kubectl create serviceaccount api-call -n t23

Define a YAML manifest file with the name pod.yaml. The contents of the file define a Pod that makes an HTTPS GET call to the API server to retrieve the list of Services in the default namespace:

apiVersion: v1
kind: Pod
metadata:
  name: service-list
  namespace: t23
spec:
  serviceAccountName: api-call
  containers:
  - name: service-list
    image: alpine/curl:3.14
    command: ['sh', '-c', 'while true; do curl -s -k -m 5 -H "Authorization: Bearer $(cat /var/run/secrets/kubernetes.io/serviceaccount/token)" https://kubernetes.default.svc.cluster.local/api/v1/namespaces/default/services; sleep 10; done']

Create the Pod with the following command:

$ kubectl apply -f pod.yaml

Check the logs of the Pod. The API call is not authorized, as shown in the following log output:

$ kubectl logs service-list -n t23

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {},

"status": "Failure",

"message": "services is forbidden: User \"system:serviceaccount:t23 \

:api-call\" cannot list resource \"services\" in API \

group \"\" in the namespace \"default\"",

"reason": "Forbidden",

"details": {

"kind": "services"

},

"code": 403

}

Question 11

Assign a ClusterRole and RoleBinding to the service account that only allows the operation needed by the Pod. Have a look at the response from the curl command.

Solution

Create the YAML manifest in the file clusterrole.yaml, as shown in the following:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: list-services-clusterrole
rules:
- apiGroups: [""]
  resources: ["services"]
  verbs: ["list"]

Reference the ClusterRole in a RoleBinding defined in the file rolebinding.yaml. The subject should list the service account api-call in the namespace t23:

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: serviceaccount-service-rolebinding
subjects:
- kind: ServiceAccount
  name: api-call
  namespace: t23
roleRef:
  kind: ClusterRole
  name: list-services-clusterrole
  apiGroup: rbac.authorization.k8s.io

Create both objects from the YAML manifests:

$ kubectl apply -f clusterrole.yaml

$ kubectl apply -f rolebinding.yaml

The API call running inside of the container should now be authorized and be allowed to list the Service objects in the default namespace. As shown in the following output, the namespace currently hosts at least one Service object, the kubernetes.default Service:

$ kubectl logs service-list -n t23

{

"kind": "ServiceList",

"apiVersion": "v1",

"metadata": {

"resourceVersion": "1108"

},

"items": [

{

"metadata": {

"name": "kubernetes",

"namespace": "default",

"uid": "30eb5425-8f60-4bb7-8331-f91fe0999e20",

"resourceVersion": "199",

"creationTimestamp": "2022-09-08T18:06:52Z",

"labels": {

"component": "apiserver",

"provider": "kubernetes"

},

...

}

]

}

Question 12

Configure the Pod so that automounting of the service account token is disabled. Retrieve the token value and use it directly with the curl command. Make sure that the curl command can still authorize the operation.

Solution

Create the token for the service account using the following command:

$ kubectl create token api-call -n t23

eyJhbGciOiJSUzI1NiIsImtpZCI6IjBtQkJzVWlsQjl...

Change the existing Pod definition by deleting and recreating the live object. Add the attribute that disables automounting the token, as shown in the following:

apiVersion: v1
kind: Pod
metadata:
  name: service-list
  namespace: t23
spec:
  serviceAccountName: api-call
  automountServiceAccountToken: false
  containers:
  - name: service-list
    image: alpine/curl:3.14
    command: ['sh', '-c', 'while true; do curl -s -k -m 5 -H "Authorization: Bearer eyJhbGciOiJSUzI1NiIsImtpZCI6IjBtQkJzVWlsQjl..." https://kubernetes.default.svc.cluster.local/api/v1/namespaces/default/services; sleep 10; done']

The API server will authenticate and authorize the HTTPS request performed with the token of the service account:

$ kubectl logs service-list -n t23

{

"kind": "ServiceList",

"apiVersion": "v1",

"metadata": {

"resourceVersion": "81194"

},

"items": [

{

"metadata": {

"name": "kubernetes",

"namespace": "default",

"uid": "30eb5425-8f60-4bb7-8331-f91fe0999e20",

"resourceVersion": "199",

"creationTimestamp": "2022-09-08T18:06:52Z",

"labels": {

"component": "apiserver",

"provider": "kubernetes"

},

...

}

]

}

Question 13

Navigate to the directory app-a/ch03/upgrade-version of the checked-out GitHub repository bmuschko/cks-study-guide. Start up the VMs running the cluster using the command vagrant up. Upgrade all nodes of the cluster from Kubernetes 1.25.6 to 1.26.1. The cluster consists of a single control plane node named kube-control-plane, and one worker node named kube-worker-1. Once done, shut down the cluster using vagrant destroy -f.

Prerequisite: This exercise requires the installation of the tools Vagrant and VirtualBox.

Solution

The solution to this sample exercise requires a lot of manual steps. The following commands do not render their output.

Open an interactive shell to the control plane node using Vagrant:

$ vagrant ssh kube-control-plane

Upgrade kubeadm to version 1.26.1 and apply it:

$ sudo apt-mark unhold kubeadm && sudo apt-get update && sudo apt-get install -y kubeadm=1.26.1-00 && sudo apt-mark hold kubeadm

$ sudo kubeadm upgrade apply v1.26.1

Drain the node, upgrade the kubelet and kubectl, restart the kubelet, and uncordon the node:

$ kubectl drain kube-control-plane --ignore-daemonsets

$ sudo apt-get update && sudo apt-get install -y --allow-change-held-packages kubelet=1.26.1-00 kubectl=1.26.1-00

$ sudo systemctl daemon-reload

$ sudo systemctl restart kubelet

$ kubectl uncordon kube-control-plane

The version of the node should now say v1.26.1. Exit the node:

$ kubectl get nodes

$ exit

Open an interactive shell to the first worker node using Vagrant. Repeat all of the following steps for the worker node:

$ vagrant ssh kube-worker-1

Upgrade kubeadm to version 1.26.1 and apply it to the node:

$ sudo apt-get update && sudo apt-get install -y --allow-change-held-packages kubeadm=1.26.1-00

$ sudo kubeadm upgrade node

Drain the node, upgrade the kubelet and kubectl, restart the kubelet, and uncordon the node:

$ kubectl drain kube-worker-1 --ignore-daemonsets

$ sudo apt-get update && sudo apt-get install -y --allow-change-held-packages kubelet=1.26.1-00 kubectl=1.26.1-00

$ sudo systemctl daemon-reload

$ sudo systemctl restart kubelet

$ kubectl uncordon kube-worker-1

The version of the node should now say v1.26.1. Exit out of the node:

$ kubectl get nodes

$ exit
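Reading the version column by eye works for two nodes, but the check can also be scripted. The sketch below filters "NAME VERSION" lines and reports any node that is not on the expected kubelet version; outdated_nodes is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read "NAME VERSION" lines on stdin and print the nodes
# whose version differs from the expected one. Exits non-zero if any differ.
outdated_nodes() {
  awk -v want="$1" 'NF >= 2 && $2 != want { print $1; bad = 1 } END { exit bad }'
}

# Usage (requires cluster access):
#   kubectl get nodes --no-headers \
#     -o custom-columns=NAME:.metadata.name,VERSION:.status.nodeInfo.kubeletVersion \
#     | outdated_nodes v1.26.1
```

An empty result with exit code 0 indicates that every listed node reports the expected version.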

System Hardening

Question 14

Navigate to the directory app-a/ch04/close-ports of the checked-out GitHub repository bmuschko/cks-study-guide. Start up the VMs running the cluster using the command vagrant up. The cluster consists of a single control plane node named kube-control-plane and one worker node named kube-worker-1. Once done, shut down the cluster using vagrant destroy -f.

Identify the process listening on port 21 in the VM kube-worker-1. You decided not to expose this port to reduce the risk of attackers exploiting the port. Close the port by shutting down the corresponding process.

Prerequisite: This exercise requires the installation of the tools Vagrant and VirtualBox.

Solution

Shell into the worker node with the following command:

$ vagrant ssh kube-worker-1

Identify the process exposing port 21. One way to do this is by using the lsof command. The command that exposes the port is vsftpd:

$ sudo lsof -i :21

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

vsftpd 10178 root 3u IPv6 56850 0t0 TCP *:ftp (LISTEN)

Alternatively, you could also use the ss command, as shown in the following:

$ sudo ss -at -pn '( dport = :21 or sport = :21 )'

State Recv-Q Send-Q Local Address:Port \

Peer Address:Port Process

LISTEN 0 32 *:21 \

*:* users:(("vsftpd",pid=10178,fd=3))

The process vsftpd has been started as a service:

$ sudo systemctl status vsftpd

● vsftpd.service - vsftpd FTP server

Loaded: loaded (/lib/systemd/system/vsftpd.service; enabled; \

vendor preset: enabled)

Active: active (running) since Thu 2022-10-06 14:39:12 UTC; \

11min ago

Main PID: 10178 (vsftpd)

Tasks: 1 (limit: 1131)

Memory: 604.0K

CGroup: /system.slice/vsftpd.service

└─10178 /usr/sbin/vsftpd /etc/vsftpd.conf

Oct 06 14:39:12 kube-worker-1 systemd[1]: Starting vsftpd FTP server...

Oct 06 14:39:12 kube-worker-1 systemd[1]: Started vsftpd FTP server.

Shut down the service and uninstall the package:

$ sudo systemctl stop vsftpd

$ sudo systemctl disable vsftpd

$ sudo apt purge --auto-remove -y vsftpd

Checking on the port, you will see that it is not listed anymore:

$ sudo lsof -i :21

Exit out of the node:

$ exit

Question 15

Navigate to the directory app-a/ch04/apparmor of the checked-out GitHub repository bmuschko/cks-study-guide. Start up the VMs running the cluster using the command vagrant up. The cluster consists of a single control plane node named kube-control-plane, and one worker node named kube-worker-1. Once done, shut down the cluster using vagrant destroy -f.

Create an AppArmor profile named network-deny. The profile should prevent any incoming and outgoing network traffic. Add the profile to the set of AppArmor rules in enforce mode. Apply the profile to the Pod named network-call running in the default namespace. Check the logs of the Pod to ensure that network calls cannot be made anymore.

Prerequisite: This exercise requires the installation of the tools Vagrant and VirtualBox.

Solution

Shell into the worker node with the following command:

$ vagrant ssh kube-worker-1

Create the AppArmor profile at /etc/apparmor.d/network-deny using the command sudo vim /etc/apparmor.d/network-deny. The contents of the file should look as follows:

#include <tunables/global>

profile network-deny flags=(attach_disconnected) {
  #include <abstractions/base>

  deny network,
}

Enforce the AppArmor profile by running the following command:

$ sudo apparmor_parser /etc/apparmor.d/network-deny

You cannot modify the existing Pod object in order to add the annotation for AppArmor. You will need to delete the object first. Write the definition of the Pod to a file:

$ kubectl get pod network-call -o yaml > pod.yaml

$ kubectl delete pod network-call

Edit the pod.yaml file to add the AppArmor annotation. For the relevant annotation, use the name of the container network-call as part of the key suffix and localhost/network-deny as the value. The suffix network-deny refers to the name of the AppArmor profile. The final content could look as follows after a little bit of cleanup:

apiVersion: v1
kind: Pod
metadata:
  name: network-call
  annotations:
    container.apparmor.security.beta.kubernetes.io/network-call: localhost/network-deny
spec:
  containers:
  - name: network-call
    image: alpine/curl:3.14
    command: ["sh", "-c", "while true; do ping -c 1 google.com; sleep 5; done"]

Create the Pod from the manifest. After a couple of seconds, the Pod should transition into the “Running” status:

$ kubectl create -f pod.yaml

$ kubectl get pod network-call

NAME READY STATUS RESTARTS AGE

network-call 1/1 Running 0 27s

AppArmor prevents the Pod from making a network call. You can check the logs to verify:

$ kubectl logs network-call

...

sh: ping: Permission denied

sh: sleep: Permission denied

Exit out of the node:

$ exit

Question 16

Navigate to the directory app-a/ch04/seccomp of the checked-out GitHub repository bmuschko/cks-study-guide. Start up the VMs running the cluster using the command vagrant up. The cluster consists of a single control plane node named kube-control-plane, and one worker node named kube-worker-1. Once done, shut down the cluster using vagrant destroy -f.

Create a seccomp profile file named audit.json that logs all syscalls in the standard seccomp directory. Apply the profile to the Pod named network-call running in the default namespace. Check the log file /var/log/syslog for log entries.

Prerequisite: This exercise requires the installation of the tools Vagrant and VirtualBox.

Solution

Shell into the worker node with the following command:

$ vagrant ssh kube-worker-1

Create the target directory for the seccomp profiles:

$ sudo mkdir -p /var/lib/kubelet/seccomp/profiles

Add the file audit.json in the directory /var/lib/kubelet/seccomp/profiles with the following content:

{

"defaultAction": "SCMP_ACT_LOG"

}

You cannot modify the existing Pod object in order to add the seccomp configuration via the security context. You will need to delete the object first. Write the definition of the Pod to a file:

$ kubectl get pod network-call -o yaml > pod.yaml

$ kubectl delete pod network-call

Edit the pod.yaml file. Point the seccomp profile to the definition. The final content could look as follows after a little bit of cleanup:

apiVersion: v1
kind: Pod
metadata:
  name: network-call
spec:
  securityContext:
    seccompProfile:
      type: Localhost
      localhostProfile: profiles/audit.json
  containers:
  - name: network-call
    image: alpine/curl:3.14
    command: ["sh", "-c", "while true; do ping -c 1 google.com; sleep 5; done"]
    securityContext:
      allowPrivilegeEscalation: false

Create the Pod from the manifest. After a couple of seconds, the Pod should transition into the “Running” status:

$ kubectl create -f pod.yaml

$ kubectl get pod network-call

NAME READY STATUS RESTARTS AGE

network-call 1/1 Running 0 27s

You should be able to find log entries for syscalls, e.g., for the sleep command:

$ sudo cat /var/log/syslog

Oct 6 16:25:06 ubuntu-focal kernel: [ 2114.894122] audit: type=1326 audit(1665073506.099:23761): auid=4294967295 uid=0 gid=0 ses=4294967295 pid=19226 comm="sleep" exe="/bin/busybox" sig=0 arch=c000003e syscall=231 compat=0 ip=0x7fc026adbf0b code=0x7ffc0000
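The syslog file fills up quickly, so it can help to summarize the seccomp records instead of reading them line by line. The sketch below counts audit records of type 1326 per command name and syscall number; seccomp_counts is a hypothetical helper name, and the log format is assumed to match the entry shown above.

```shell
#!/bin/sh
# Hypothetical helper: count seccomp audit records (type=1326) on stdin,
# grouped by command name and syscall number.
seccomp_counts() {
  grep 'type=1326' \
    | sed -n 's/.*comm="\([^"]*\)".*syscall=\([0-9]*\).*/\1 syscall=\2/p' \
    | sort | uniq -c
}

# Usage on the worker node:
#   sudo cat /var/log/syslog | seccomp_counts
```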

Exit out of the node:

$ exit

Question 17

Create a new Pod named sysctl-pod with the image nginx:1.23.1. Set the sysctl parameters net.core.somaxconn to 1024 and debug.iotrace to 1. Check on the status of the Pod.

Solution

Create the Pod definition in the file pod.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: sysctl-pod
spec:
  securityContext:
    sysctls:
    - name: net.core.somaxconn
      value: "1024"
    - name: debug.iotrace
      value: "1"
  containers:
  - name: nginx
    image: nginx:1.23.1

Create the Pod and then check on the status. You will see that the status is “SysctlForbidden”:

$ kubectl create -f pod.yaml

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

sysctl-pod 0/1 SysctlForbidden 0 4s

The event log will tell you more about the reasoning:

$ kubectl describe pod sysctl-pod

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning SysctlForbidden 2m48s kubelet forbidden sysctl: "net.core.somaxconn" not allowlisted

Minimize Microservice Vulnerabilities

Question 18

Create a Pod named busybox-security-context with the container image busybox:1.28 that runs the command sh -c sleep 1h. Add a Volume of type emptyDir and mount it to the path /data/test. Configure a security context with the following attributes: runAsUser: 1000, runAsGroup: 3000, and fsGroup: 2000. Furthermore, set the attribute allowPrivilegeEscalation to false.

Shell into the container, navigate to the directory /data/test, and create the file named hello.txt. Check the group assigned to the file. What’s the value? Exit out of the container.

Solution

Define the Pod with the security settings in the file busybox-security-context.yaml. The YAML manifest looks as follows:

apiVersion: v1
kind: Pod
metadata:
  name: busybox-security-context
spec:
  securityContext:
    runAsUser: 1000
    runAsGroup: 3000
    fsGroup: 2000
  volumes:
  - name: vol
    emptyDir: {}
  containers:
  - name: busybox
    image: busybox:1.28
    command: ["sh", "-c", "sleep 1h"]
    volumeMounts:
    - name: vol
      mountPath: /data/test
    securityContext:
      allowPrivilegeEscalation: false

Create the Pod with the following command:

$ kubectl apply -f busybox-security-context.yaml

$ kubectl get pod busybox-security-context

NAME READY STATUS RESTARTS AGE

busybox-security-context 1/1 Running 0 54s

Shell into the container and create the file. You will find that the file group is 2000, as defined by the security context:

$ kubectl exec busybox-security-context -it -- /bin/sh

/ $ cd /data/test

/data/test $ touch hello.txt

/data/test $ ls -l

total 0

-rw-r--r-- 1 1000 2000 0 Nov 21 18:29 hello.txt

/data/test $ exit

Question 19

Create a Pod Security Admission (PSA) rule. In the namespace called audited, apply the Pod Security Standard (PSS) level baseline so that violations are rendered to the console.

Try to create a Pod in the namespace that violates the PSS and produces a message on the console log. You can provide any name, container image, and security configuration you like. Will the Pod be created? What PSA level needs to be configured to prevent the creation of the Pod?

Solution

Specify the namespace named audited in the file psa-namespace.yaml. Set the PSA label with baseline level and the warn mode:

apiVersion: v1
kind: Namespace
metadata:
  name: audited
  labels:
    pod-security.kubernetes.io/warn: baseline

Create the namespace from the YAML manifest:

$ kubectl apply -f psa-namespace.yaml

You can trigger a violation by using the following Pod configuration in the file psa-pod.yaml. The YAML manifest sets the attribute hostNetwork: true, which is not allowed for the baseline level:

apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: audited
spec:
  hostNetwork: true
  containers:
  - name: busybox
    image: busybox:1.28
    command: ["sh", "-c", "sleep 1h"]

Creating the Pod renders a warning message, but the Pod is created nevertheless. To prevent the creation of the Pod, the namespace would need the enforce mode (label pod-security.kubernetes.io/enforce: baseline) rather than warn. With the warn mode, the output looks as follows:

$ kubectl apply -f psa-pod.yaml

Warning: would violate PodSecurity "baseline:latest": host namespaces \

(hostNetwork=true)

pod/busybox created

$ kubectl get pod busybox -n audited

NAME READY STATUS RESTARTS AGE

busybox 1/1 Running 0 2m21s

Question 20

Install Gatekeeper on your cluster. Create a Gatekeeper ConstraintTemplate object that defines the minimum and maximum number of replicas controlled by a ReplicaSet. Instantiate a Constraint object that uses the ConstraintTemplate. Set the minimum number of replicas to 3 and the maximum number to 10.

Create a Deployment object that sets the number of replicas to 15. Gatekeeper should not allow the Deployment, ReplicaSet, and Pods to be created. An error message should be rendered. Try again to create the Deployment object but with a replica number of 7. Verify that all objects have been created successfully.

Solution

You can install Gatekeeper with the following command:

$ kubectl apply -f https://raw.githubusercontent.com/open-policy-agent/gatekeeper/master/deploy/gatekeeper.yaml

The Gatekeeper library describes a ConstraintTemplate for defining replica limits. Inspect the YAML manifest described on the page. Apply the manifest with the following command:

$ kubectl apply -f https://raw.githubusercontent.com/open-policy-agent/gatekeeper-library/master/library/general/replicalimits/template.yaml

Now, define the Constraint with the YAML manifest in the file named replica-limits-constraint.yaml:

apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sReplicaLimits
metadata:
  name: replica-limits
spec:
  match:
    kinds:
    - apiGroups: ["apps"]
      kinds: ["Deployment"]
  parameters:
    ranges:
    - min_replicas: 3
      max_replicas: 10

Create the Constraint with the following command:

$ kubectl apply -f replica-limits-constraint.yaml

You can see that a Deployment can only be created if the provided number of replicas falls within the range of the Constraint:

$ kubectl create deployment nginx --image=nginx:1.23.2 --replicas=15

error: failed to create deployment: admission webhook \

"validation.gatekeeper.sh" denied the request: [replica-limits] \

The provided number of replicas is not allowed for deployment: nginx. \

Allowed ranges: {"ranges": [{"max_replicas": 10, "min_replicas": 3}]}

$ kubectl create deployment nginx --image=nginx:1.23.2 --replicas=7

deployment.apps/nginx created

Question 21

Configure encryption for etcd using the aescbc provider. Create a new Secret object of type Opaque. Provide the key-value pair api-key=YZvkiWUkycvspyGHk3fQRAkt. Query for the value of the Secret using etcdctl. What’s the encrypted value?

Solution

Configure encryption for etcd, as described in “Encrypting etcd Data”. Next, create a new Secret with the following imperative command:

$ kubectl create secret generic db-credentials --from-literal=api-key=YZvkiWUkycvspyGHk3fQRAkt

You can check the encrypted value of the Secret stored in etcd with the following command:

$ sudo ETCDCTL_API=3 etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key get /registry/secrets/default/db-credentials | hexdump -C
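The encryption configuration referenced at the start of this solution is not reproduced in this article. As a rough sketch, assuming the aescbc provider with a single key, it could be generated as follows; the helper name render_encryption_config and the key name key1 are illustrative choices, not values taken from the exercise.

```shell
#!/bin/sh
# Hypothetical helper: print an EncryptionConfiguration that encrypts Secrets
# with the aescbc provider, using the given base64-encoded 32-byte key.
render_encryption_config() {
  cat <<EOF
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            - name: key1
              secret: $1
      - identity: {}
EOF
}

# Generate a random 32-byte key and encode it as base64.
key=$(head -c 32 /dev/urandom | base64)
render_encryption_config "$key"
```

The output would be saved on the control plane node and referenced by the API server through the --encryption-provider-config flag; the file location is up to you and has to be mounted into the API server Pod.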

Question 22

Navigate to the directory app-a/ch05/gvisor of the checked-out GitHub repository bmuschko/cks-study-guide. Start up the VMs running the cluster using the command vagrant up. The cluster consists of a single control plane node named kube-control-plane and one worker node named kube-worker-1. Once done, shut down the cluster using vagrant destroy -f.

gVisor has been installed in the VM kube-worker-1. Shell into the VM and create a RuntimeClass object named container-runtime-sandbox with runsc as the handler. Then create a Pod with the name nginx and the container image nginx:1.23.2 and assign the RuntimeClass to it.

Prerequisite: This exercise requires the installation of the tools Vagrant and VirtualBox.

Solution

Open an interactive shell to the worker node using Vagrant:

$ vagrant ssh kube-worker-1

Define the RuntimeClass with the following YAML manifest. The contents have been stored in the file runtime-class.yaml:

apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: container-runtime-sandbox
handler: runsc

Create the RuntimeClass object:

$ kubectl apply -f runtime-class.yaml

Assign the name of the RuntimeClass to the Pod using the spec.runtimeClassName attribute. The nginx Pod has been defined in the file pod.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  runtimeClassName: container-runtime-sandbox
  containers:
  - name: nginx
    image: nginx:1.23.2

Create the Pod object. The Pod will transition into the status “Running”:

$ kubectl apply -f pod.yaml
$ kubectl get pod nginx
NAME    READY   STATUS    RESTARTS   AGE
nginx   1/1     Running   0          2m21s

Exit out of the node:

$ exit

Supply Chain Security

Question 23

Have a look at the following Dockerfile. Can you identify possibilities for reducing the footprint of the produced container image?

FROM node:latest
ENV NODE_ENV development
WORKDIR /app
COPY package.json .
RUN npm install
COPY . .
EXPOSE 3001
CMD ["node", "app.js"]

Apply Dockerfile best practices to optimize the container image footprint. Run the docker build command before and after making optimizations. The resulting size of the container image should be smaller but the application should still be functioning.

Solution

The initial container image built with the provided Dockerfile has a size of 998MB. You can produce and run the container image with the following commands. Run a quick curl command to see if the endpoint exposed by the application can be reached:

$ docker build . -t node-app:0.0.1
...
$ docker images
REPOSITORY   TAG     IMAGE ID       CREATED         SIZE
node-app     0.0.1   7ba99d4ba3af   3 seconds ago   998MB
$ docker run -p 3001:3001 -d node-app:0.0.1
c0c8a301eeb4ac499c22d10399c424e1063944f18fff70ceb5c49c4723af7969
$ curl -L http://localhost:3001/
Hello World

One of the changes you can make is to avoid using a large base image. You could replace it with the alpine version of the node base image. Also, avoid pulling the latest image. Pick the Node.js version you actually want the application to run with. The following command uses a Dockerfile with the base image node:19-alpine, which reduces the container image size to 176MB:

$ docker build . -t node-app:0.0.1
...
$ docker images
REPOSITORY   TAG     IMAGE ID       CREATED         SIZE
node-app     0.0.1   ef2fbec41a75   2 seconds ago   176MB
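The modified Dockerfile itself is not shown above. A minimal sketch of it, assuming that only the base image changes and that the variant is written to a hypothetical file named Dockerfile.alpine so the original stays untouched, might look like this:

```shell
#!/bin/sh
# Sketch: write the Dockerfile variant with a pinned, smaller base image.
# The file name Dockerfile.alpine is an assumption for illustration.
set -eu

cat > Dockerfile.alpine <<'EOF'
# Pinned Alpine-based image instead of the latest tag
FROM node:19-alpine
ENV NODE_ENV development
WORKDIR /app
COPY package.json .
RUN npm install
COPY . .
EXPOSE 3001
CMD ["node", "app.js"]
EOF

echo "base image line: $(grep '^FROM' Dockerfile.alpine)"
```

The image could then be built with docker build -f Dockerfile.alpine . -t node-app:0.0.1 and compared against the earlier build using docker images.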

Question 24

Install Kyverno in your Kubernetes cluster. You can find installation instructions in the documentation.

Apply the “Restrict Image Registries” policy described on the documentation page. The only allowed registry should be gcr.io. Usage of any other registry should be denied.

Create a Pod that defines the container image busybox:1.27.2. Creation of the Pod should fail. Create another Pod using the container image gcr.io/google-containers/busybox:1.27.2. Kyverno should allow the Pod to be created.

Solution

You can install Kyverno using Helm or by pointing to the YAML manifest available on the project’s GitHub repository. We’ll use the YAML manifest here:

$ kubectl create -f https://raw.githubusercontent.com/kyverno/kyverno/main/config/install.yaml

Set up a YAML manifest file named restrict-image-registries.yaml. Add the following contents to the file. The manifest represents a ClusterPolicy that only allows the use of container images that start with gcr.io/. Make sure to assign the value Enforce to the attribute spec.validationFailureAction:

apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: restrict-image-registries
  annotations:
    policies.kyverno.io/title: Restrict Image Registries
    policies.kyverno.io/category: Best Practices, EKS Best Practices
    policies.kyverno.io/severity: medium
    policies.kyverno.io/minversion: 1.6.0
    policies.kyverno.io/subject: Pod
    policies.kyverno.io/description: >-
      Images from unknown, public registries can be of dubious quality
      and may not be scanned and secured, representing a high degree of
      risk. Requiring use of known, approved registries helps reduce
      threat exposure by ensuring image pulls only come from them. This
      policy validates that container images only originate from the
      registry `eu.foo.io` or `bar.io`. Use of this policy requires
      customization to define your allowable registries.
spec:
  validationFailureAction: Enforce
  background: true
  rules:
  - name: validate-registries
    match:
      any:
      - resources:
          kinds:
          - Pod
    validate:
      message: "Unknown image registry."
      pattern:
        spec:
          containers:
          - image: "gcr.io/*"

Apply the manifest with the following command:

$ kubectl apply -f restrict-image-registries.yaml

Run the following commands to verify that the policy has become active. Any container image definition that doesn’t use the prefix gcr.io/ will be denied:

$ kubectl run nginx --image=nginx:1.23.3
Error from server: admission webhook "validate.kyverno.svc-fail" \
denied the request: policy Pod/default/nginx for resource violation:
restrict-image-registries:
  validate-registries: 'validation error: Unknown image registry. \
    rule validate-registries failed at path /spec/containers/0/image/'
$ kubectl run busybox --image=gcr.io/google-containers/busybox:1.27.2
pod/busybox created

Question 25

Define a Pod using the container image nginx:1.23.3-alpine in the YAML manifest pod-validate-image.yaml. Retrieve the digest of the container image from Docker Hub. Validate the container image contents using the SHA256 hash. Create the Pod. Kubernetes should be able to successfully pull the container image.

Solution

Find the SHA256 hash for the image nginx:1.23.3-alpine with the search functionality of Docker Hub. The search result will lead you to the tag of the image. On top of the page, you should find the digest sha256:c1b9fe3c0c015486cf1e4a0ecabe78d05864475e279638e9713eb55f013f907f. Use the digest instead of the tag in the Pod definition. The result is the following YAML manifest:

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: nginx@sha256:c1b9fe3c0c015486cf1e4a0ecabe78d05864475e279638e9713eb55f013f907f

The creation of the Pod should work:

$ kubectl apply -f pod-validate-image.yaml
pod/nginx created
$ kubectl get pods nginx
NAME    READY   STATUS    RESTARTS   AGE
nginx   1/1     Running   0          29s

If you modify the SHA256 hash in any form and try to recreate the Pod, then Kubernetes would not allow you to pull the image.
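The reason a modified digest cannot be pulled is that the digest is derived from the content it names. The following sketch illustrates only that property with local files and sha256sum. It does not talk to a registry, and the file contents are made up for the example:

```shell
#!/bin/sh
# Sketch: a SHA256 digest no longer matches once the content changes.
# The two files below are hypothetical stand-ins for image content.
set -eu

workdir=$(mktemp -d)
printf 'image layer contents\n' > "$workdir/original"
printf 'image layer contents (tampered)\n' > "$workdir/tampered"

# Print only the hex digest of a file.
digest_of() { sha256sum "$1" | cut -d' ' -f1; }

# The digest recorded for the trusted content.
expected=$(digest_of "$workdir/original")

# Compare a file's digest against the recorded one.
verify() {
  if [ "$(digest_of "$1")" = "$expected" ]; then echo "match"; else echo "mismatch"; fi
}

original_result=$(verify "$workdir/original")
tampered_result=$(verify "$workdir/tampered")
echo "original: $original_result"
echo "tampered: $tampered_result"
rm -rf "$workdir"
```

Pinning the image by digest in the Pod manifest relies on the same idea: the container runtime only accepts content whose digest equals the one in the image reference.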

Question 26

Use Kubesec to analyze the following contents of the YAML manifest file pod.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: hello-world
spec:
  securityContext:
    runAsUser: 0
  containers:
  - name: linux
    image: hello-world:linux

Inspect the suggestions generated by Kubesec. Ignore the suggestions on seccomp and AppArmor. Fix the root cause of all messages so that another execution of the tool will not report any additional suggestions.

Solution

Running Kubesec in a Docker container results in a whole bunch of suggestions, as shown in the following output:

$ docker run -i kubesec/kubesec:512c5e0 scan /dev/stdin < pod.yaml

[

{

"object": "Pod/hello-world.default",

"valid": true,

"message": "Passed with a score of 0 points",

"score": 0,

"scoring": {

"advise": [

{

"selector": "containers[] .securityContext .capabilities \

.drop | index(\"ALL\")",

"reason": "Drop all capabilities and add only those \

required to reduce syscall attack surface"

},

{

"selector": "containers[] .resources .requests .cpu",

"reason": "Enforcing CPU requests aids a fair balancing \

of resources across the cluster"

},

{

"selector": "containers[] .securityContext .runAsNonRoot \

== true",

"reason": "Force the running image to run as a non-root \

user to ensure least privilege"

},

{

"selector": "containers[] .resources .limits .cpu",

"reason": "Enforcing CPU limits prevents DOS via resource \

exhaustion"

},

{

"selector": "containers[] .securityContext .capabilities \

.drop",

"reason": "Reducing kernel capabilities available to a \

container limits its attack surface"

},

{

"selector": "containers[] .resources .requests .memory",

"reason": "Enforcing memory requests aids a fair balancing \

of resources across the cluster"

},

{

"selector": "containers[] .resources .limits .memory",

"reason": "Enforcing memory limits prevents DOS via resource \

exhaustion"

},

{

"selector": "containers[] .securityContext \

.readOnlyRootFilesystem == true",

"reason": "An immutable root filesystem can prevent malicious \

binaries being added to PATH and increase attack \

cost"

},

{

"selector": ".metadata .annotations .\"container.seccomp. \

security.alpha.kubernetes.io/pod\"",

"reason": "Seccomp profiles set minimum privilege and secure \

against unknown threats"

},

{

"selector": ".metadata .annotations .\"container.apparmor. \

security.beta.kubernetes.io/nginx\"",

"reason": "Well defined AppArmor policies may provide greater \

protection from unknown threats. WARNING: NOT \

PRODUCTION READY"

},

{

"selector": "containers[] .securityContext .runAsUser -gt \

10000",

"reason": "Run as a high-UID user to avoid conflicts with \

the host's user table"

},

{

"selector": ".spec .serviceAccountName",

"reason": "Service accounts restrict Kubernetes API access \

and should be configured with least privilege"

}

]

}

}

]

The fixed-up YAML manifest could look like this:

apiVersion: v1
kind: Pod
metadata:
  name: hello-world
spec:
  serviceAccountName: default
  containers:
  - name: linux
    image: hello-world:linux
    resources:
      requests:
        memory: "64Mi"
        cpu: "250m"
      limits:
        memory: "128Mi"
        cpu: "500m"
    securityContext:
      readOnlyRootFilesystem: true
      runAsNonRoot: true
      runAsUser: 20000
      capabilities:
        drop: ["ALL"]

Question 27

Navigate to the directory app-a/ch06/trivy of the checked-out GitHub repository bmuschko/cks-study-guide. Execute the command kubectl apply -f setup.yaml.

The command creates three different Pods in the namespace r61. From the command line, execute Trivy against the container images used by the Pods. Delete all Pods that have “CRITICAL” vulnerabilities. Which of the Pods are still running?

Solution

Executing the kubectl apply command against the existing setup.yaml manifest will create the Pods named backend, loop, and logstash in the namespace r61:

$ kubectl apply -f setup.yaml

namespace/r61 created

pod/backend created

pod/loop created

pod/logstash created

You can check on them with the following command:

$ kubectl get pods -n r61

NAME READY STATUS RESTARTS AGE

backend 1/1 Running 0 115s

logstash 1/1 Running 0 115s

loop 1/1 Running 0 115s

Check the images of each Pod in the namespace r61 using the kubectl describe command. The images used are bmuschko/nodejs-hello-world:1.0.0, alpine:3.13.4, and elastic/logstash:7.13.3:

$ kubectl describe pod backend -n r61

...

Containers:

hello:

Container ID: docker://eb0bdefc75e635d03b625140d1e \

b229ca2db7904e44787882147921c2bd9c365

Image: bmuschko/nodejs-hello-world:1.0.0

...

Use the Trivy executable to check vulnerabilities for all images:

$ trivy image bmuschko/nodejs-hello-world:1.0.0
$ trivy image alpine:3.13.4
$ trivy image elastic/logstash:7.13.3

If you look closely at the list of vulnerabilities, you will find that every image contains issues with “CRITICAL” severity. As a result, delete all three Pods, which leaves no Pods running in the namespace:

$ kubectl delete pod backend -n r61
$ kubectl delete pod logstash -n r61
$ kubectl delete pod loop -n r61
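Reading the full Trivy reports can be slow under exam conditions. As a rough sketch, the scan could be limited to the severity the question asks about with Trivy's --severity and --exit-code options. The helper name scan_cmd and the fallback message are illustrative assumptions, not part of the original solution:

```shell
#!/bin/sh
# Sketch: scan each image for CRITICAL findings only.
set -eu

IMAGES="bmuschko/nodejs-hello-world:1.0.0 alpine:3.13.4 elastic/logstash:7.13.3"

# Hypothetical helper that prints the Trivy command for one image.
# --severity limits the report; --exit-code 1 makes Trivy exit non-zero on findings.
scan_cmd() {
  echo "trivy image --severity CRITICAL --exit-code 1 $1"
}

for image in $IMAGES; do
  cmd=$(scan_cmd "$image")
  if command -v trivy >/dev/null 2>&1; then
    if $cmd; then
      echo "$image: no CRITICAL findings"
    else
      echo "$image: CRITICAL findings (or scan error)"
    fi
  else
    # Fall back to printing the command when Trivy is not installed.
    echo "trivy not installed; would run: $cmd"
  fi
done
```

A non-zero exit status for an image is the signal to delete the Pod that uses it.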

Monitoring, Logging And Runtime Security

Question 28

Navigate to the directory app-a/ch07/falco of the checked-out GitHub repository bmuschko/cks-study-guide. Start up the VMs running the cluster using the command vagrant up. The cluster consists of a single control plane node named kube-control-plane and one worker node named kube-worker-1. Once done, shut down the cluster using vagrant destroy -f. Falco is already running as a systemd service.

Inspect the process running in the existing Pod named malicious. Have a look at the Falco logs and see if a rule created a log for the process.

Reconfigure the existing rule that creates a log for the event by changing the output to <timestamp>,<username>,<container-id>. Find the changed log entry in the Falco logs.

Reconfigure Falco to write logs to the file at /var/log/falco.log. Disable the standard output channel. Ensure that Falco appends new messages to the log file.

Prerequisite: This exercise requires the installation of the tools Vagrant and VirtualBox.

Solution

Shell into the worker node with the following command:

$ vagrant ssh kube-worker-1

Inspect the command and arguments of the running Pod named malicious. You will see that it tries to append a message to the file /etc/threat:

$ kubectl get pod malicious -o yaml

...

spec:

containers:

- args:

- /bin/sh

- -c

- while true; do echo "attacker intrusion" >> /etc/threat; \

sleep 5; done

...

One of Falco’s default rules monitors file operations that try to write to the /etc directory. You can find a message for every write attempt in standard output:

$ sudo journalctl -fu falco

Jan 24 23:40:18 kube-worker-1 falco[8575]: 23:40:18.359740123: Error \

File below /etc opened for writing (user=<NA> user_loginuid=-1 \

command=sh -c while true; do echo "attacker intrusion" >> /etc/threat; \

sleep 5; done pid=9763 parent=<NA> pcmdline=<NA> file=/etc/threat \

program=sh gparent=<NA> ggparent=<NA> gggparent=<NA> \

container_id=e72a6dbb63b8 image=docker.io/library/alpine)

...

Find the rule that produces the message in /etc/falco/falco_rules.yaml by searching for the string “etc opened for writing.” The rule looks as follows:

- rule: Write below etc
  desc: an attempt to write to any file below /etc
  condition: write_etc_common
  output: "File below /etc opened for writing (user=%user.name \
    user_loginuid=%user.loginuid command=%proc.cmdline \
    pid=%proc.pid parent=%proc.pname pcmdline=%proc.pcmdline \
    file=%fd.name program=%proc.name gparent=%proc.aname[2] \
    ggparent=%proc.aname[3] gggparent=%proc.aname[4] \
    container_id=%container.id image=%container.image.repository)"
  priority: ERROR
  tags: [filesystem, mitre_persistence]

Copy the rule to the file /etc/falco/falco_rules.local.yaml and modify the output definition, as follows:

- rule: Write below etc
  desc: an attempt to write to any file below /etc
  condition: write_etc_common
  output: "%evt.time,%user.name,%container.id"
  priority: ERROR
  tags: [filesystem, mitre_persistence]

Restart the Falco service, and find the changed output in the Falco logs:

$ sudo systemctl restart falco
$ sudo journalctl -fu falco
Jan 24 23:48:18 kube-worker-1 falco[17488]: 23:48:18.516903001: \
Error 23:48:18.516903001,<NA>,e72a6dbb63b8
...

Edit the file /etc/falco/falco.yaml to change the output channel. Disable standard output, enable file output, and point the file_output attribute to the file /var/log/falco.log. The resulting configuration will look like the following:

file_output:
  enabled: true
  keep_alive: false
  filename: /var/log/falco.log

stdout_output:
  enabled: false

Falco will now append its log messages to the file:

$ sudo tail -f /var/log/falco.log

00:10:30.425084165: Error 00:10:30.425084165,<NA>,e72a6dbb63b8

...

Exit out of the VM:

$ exit

Question 29

Navigate to the directory app-a/ch07/immutable-container of the checked-out GitHub repository bmuschko/cks-study-guide. Execute the command kubectl apply -f setup.yaml.

Inspect the Pod created by the YAML manifest in the default namespace. Make relevant changes to the Pod so that its container can be considered immutable.

Solution

Create the Pod named hash from the setup.yaml file. The command running in its container appends a hash to a file at /var/config/hash.txt in an infinite loop:

$ kubectl apply -f setup.yaml
pod/hash created
$ kubectl get pod hash
NAME   READY   STATUS    RESTARTS   AGE
hash   1/1     Running   0          27s
$ kubectl exec -it hash -- /bin/sh
/ # ls /var/config/hash.txt
/var/config/hash.txt

To make the container immutable, you will have to add configuration to the existing Pod definition. You have to set the root filesystem to read-only access and mount a Volume to the path /var/config to allow writing to the file named hash.txt. The resulting YAML manifest could look as follows:

apiVersion: v1
kind: Pod
metadata:
  name: hash
spec:
  containers:
  - name: hash
    image: alpine:3.17.1
    securityContext:
      readOnlyRootFilesystem: true
    volumeMounts:
    - name: hash-vol
      mountPath: /var/config
    command: ["sh", "-c", "if [ ! -d /var/config ]; then mkdir -p \
      /var/config; fi; while true; do echo $RANDOM | md5sum \
      | head -c 20 >> /var/config/hash.txt; sleep 20; done"]
  volumes:
  - name: hash-vol
    emptyDir: {}

Question 30

Navigate to the directory app-a/ch07/audit-log of the checked-out GitHub repository bmuschko/cks-study-guide. Start up the VMs running the cluster using the command vagrant up. The cluster consists of a single control plane node named kube-control-plane and one worker node named kube-worker-1. Once done, shut down the cluster using vagrant destroy -f.

Edit the existing audit policy file at /etc/kubernetes/audit/rules/audit-policy.yaml. Add a rule that logs events for ConfigMaps and Secrets at the Metadata level. Add another rule that logs events for Services at the Request level.

Configure the API server to consume the audit policy file. Logs should be written to the file /var/log/kubernetes/audit/logs/apiserver.log. Define a maximum number of five days to retain audit log files.

Ensure that the log file has been created and contains at least one entry that matches the events configured.

Prerequisite: This exercise requires the installation of the tools Vagrant and VirtualBox.

Solution

Shell into the control plane node with the following command:

$ vagrant ssh kube-control-plane

Edit the existing audit policy file at /etc/kubernetes/audit/rules/audit-policy.yaml. Add the rules asked about in the instructions. The content of the final audit policy file could look as follows:

apiVersion: audit.k8s.io/v1
kind: Policy
omitStages:
  - "RequestReceived"
rules:
  - level: RequestResponse
    resources:
    - group: ""
      resources: ["pods"]
  - level: Metadata
    resources:
    - group: ""
      resources: ["secrets", "configmaps"]
  - level: Request
    resources:
    - group: ""
      resources: ["services"]

Configure the API server to consume the audit policy file by editing the file /etc/kubernetes/manifests/kube-apiserver.yaml. Provide additional options, as requested. The relevant configuration needed is as follows:

...
spec:
  containers:
  - command:
    - kube-apiserver
    - --audit-policy-file=/etc/kubernetes/audit/rules/audit-policy.yaml
    - --audit-log-path=/var/log/kubernetes/audit/logs/apiserver.log
    - --audit-log-maxage=5
    ...
    volumeMounts:
    - mountPath: /etc/kubernetes/audit/rules/audit-policy.yaml
      name: audit
      readOnly: true
    - mountPath: /var/log/kubernetes/audit/logs/
      name: audit-log
      readOnly: false
  ...
  volumes:
  - name: audit
    hostPath:
      path: /etc/kubernetes/audit/rules/audit-policy.yaml
      type: File
  - name: audit-log
    hostPath:
      path: /var/log/kubernetes/audit/logs/
      type: DirectoryOrCreate

One of the logged resources is a ConfigMap on the Metadata level. The following command creates an exemplary ConfigMap object:

$ kubectl create configmap db-user --from-literal=username=tom

configmap/db-user created

The audit log file will now contain an entry for the event:

$ sudo cat /var/log/kubernetes/audit/logs/apiserver.log

{"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Metadata", \

"auditID":"1fbb409a-3815-4da8-8a5e-d71c728b98b1","stage": \

"ResponseComplete","requestURI":"/api/v1/namespaces/default/configmaps? \

fieldManager=kubectl-create\u0026fieldValidation=Strict","verb": \

"create","user":{"username":"kubernetes-admin","groups": \

["system:masters","system:authenticated"]},"sourceIPs": \

["192.168.56.10"], "userAgent":"kubectl/v1.24.4 (linux/amd64) \

kubernetes/95ee5ab", "objectRef":{"resource":"configmaps", \

"namespace":"default", "name":"db-user","apiVersion":"v1"}, \

"responseStatus":{"metadata": {},"code":201}, \

"requestReceivedTimestamp":"2023-01-25T18:57:51.367219Z", \

"stageTimestamp":"2023-01-25T18:57:51.372094Z","annotations": \

{"authorization.k8s.io/decision":"allow", \

"authorization.k8s.io/reason":""}}

Exit out of the VM:

$ exit
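The audit log grows quickly, so reading it with cat becomes impractical. As a small sketch of how the check could be narrowed while still logged in to the control plane node, the following counts entries per resource type with grep. It runs against a temporary sample file with two abbreviated, hypothetical entries rather than the real log, and the helper name count_events is made up for the example:

```shell
#!/bin/sh
# Sketch: count audit events per resource type in a JSON-lines audit log.
set -eu

# Sample file standing in for /var/log/kubernetes/audit/logs/apiserver.log.
# Both entries are abbreviated, hypothetical examples.
log=$(mktemp)
cat > "$log" <<'EOF'
{"kind":"Event","level":"Metadata","verb":"create","objectRef":{"resource":"configmaps","namespace":"default","name":"db-user","apiVersion":"v1"}}
{"kind":"Event","level":"RequestResponse","verb":"get","objectRef":{"resource":"pods","namespace":"default","name":"nginx","apiVersion":"v1"}}
EOF

# Hypothetical helper: number of log lines that reference the given resource.
count_events() {
  # grep -c prints the match count; "|| true" keeps set -e from aborting on zero matches.
  grep -c "\"resource\":\"$1\"" "$log" || true
}

configmap_events=$(count_events configmaps)
service_events=$(count_events services)
echo "configmaps: $configmap_events, services: $service_events"
```

On the real node, the same grep pattern could be pointed at the audit log path configured with --audit-log-path, using sudo to read the file.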

Reference

Certified Kubernetes Security Specialist (CKS) Study Guide by Benjamin Muschko